In this article, we will first discuss the second job, “deploy”. In this job we build the Docker image, push it to Amazon ECR and scan it using Trivy. We then scan the Kubernetes YAML file using Terrascan and deploy the Spring Boot application to Minikube.

Step by Step Process

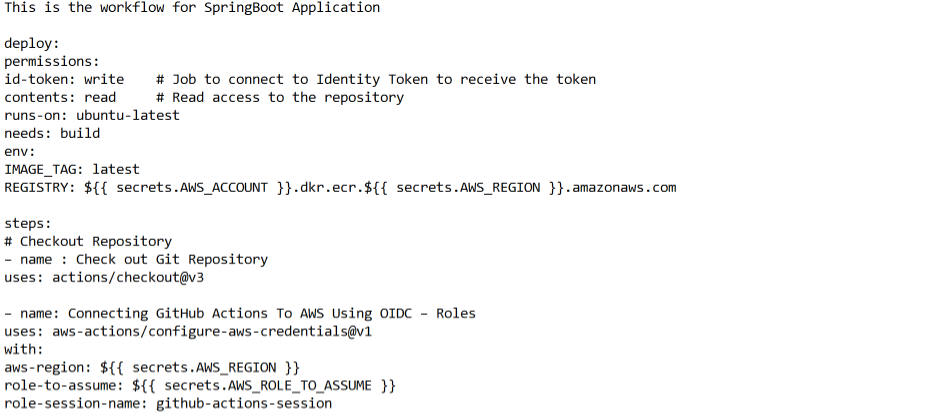

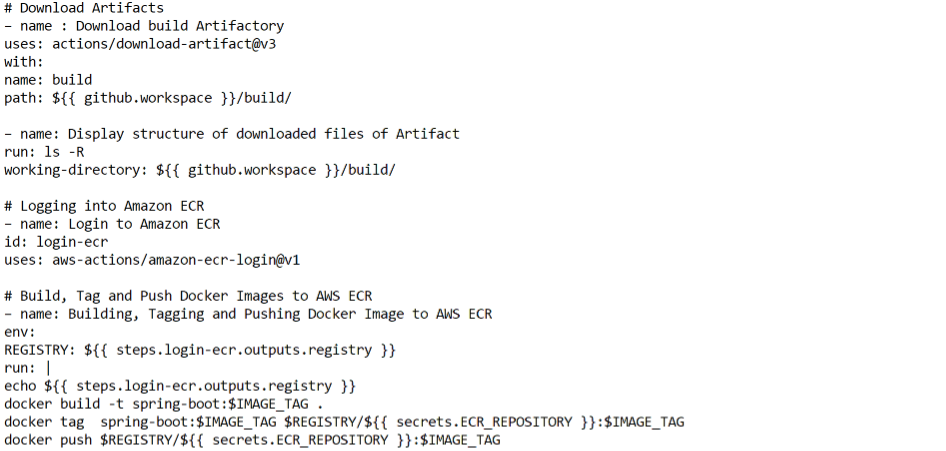

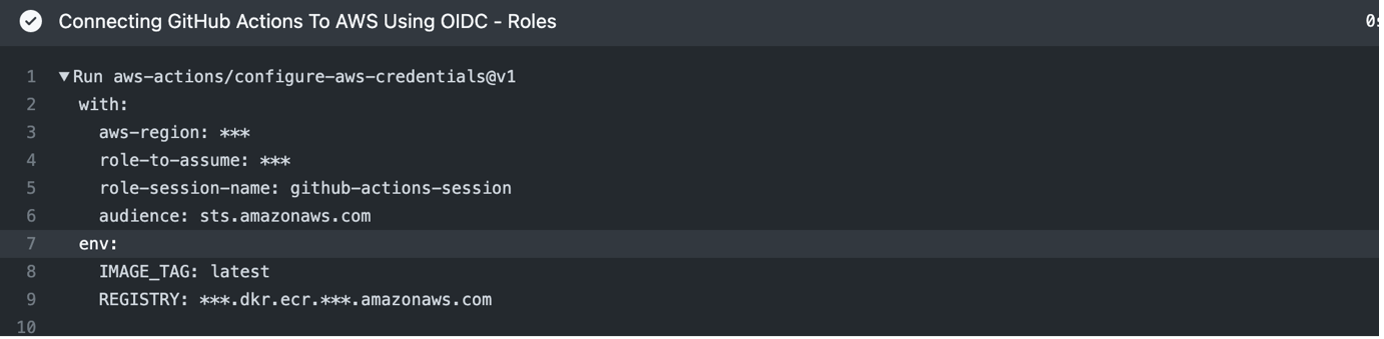

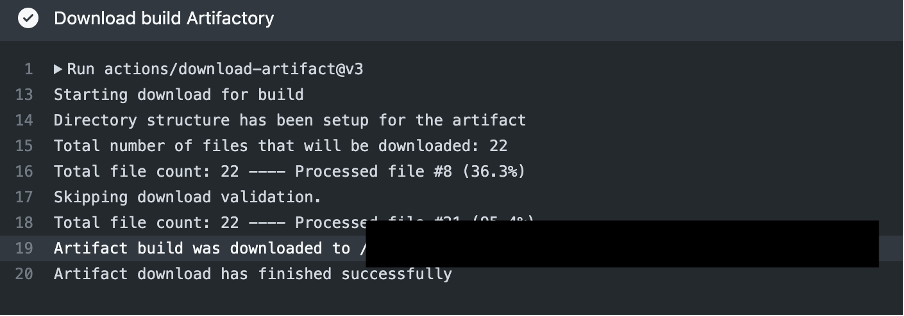

The workflow starts by checking out the repository. It then connects GitHub Actions to AWS using OIDC, which allows the workflow to assume an IAM role to access AWS resources. Next, it downloads the artifacts built by the previous job and displays the structure of the downloaded files.
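These first steps could look roughly like the following GitHub Actions fragment (it sits under jobs:). This is a minimal sketch, not the author's exact workflow: the job name build, the secret AWS_ROLE_ARN, the region us-east-1, the artifact name build-artifacts and the download path are all hypothetical. The id-token: write permission is what lets the job request the OIDC token.

```yaml
deploy:
  runs-on: ubuntu-latest
  needs: build                      # hypothetical name of the first job
  permissions:
    id-token: write                 # required to request the OIDC token
    contents: read                  # required by actions/checkout
  steps:
    - name: Checkout repository
      uses: actions/checkout@v4

    - name: Configure AWS credentials via OIDC
      uses: aws-actions/configure-aws-credentials@v4
      with:
        role-to-assume: ${{ secrets.AWS_ROLE_ARN }}   # hypothetical secret name
        aws-region: us-east-1

    - name: Download build artifacts
      uses: actions/download-artifact@v4
      with:
        name: build-artifacts       # hypothetical artifact name
        path: build/libs            # where the Dockerfile expects the JAR

    - name: Display structure of downloaded files
      run: ls -R build/libs
```

Downloading into build/libs is a design choice so that the Dockerfile's COPY path works without moving files around.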

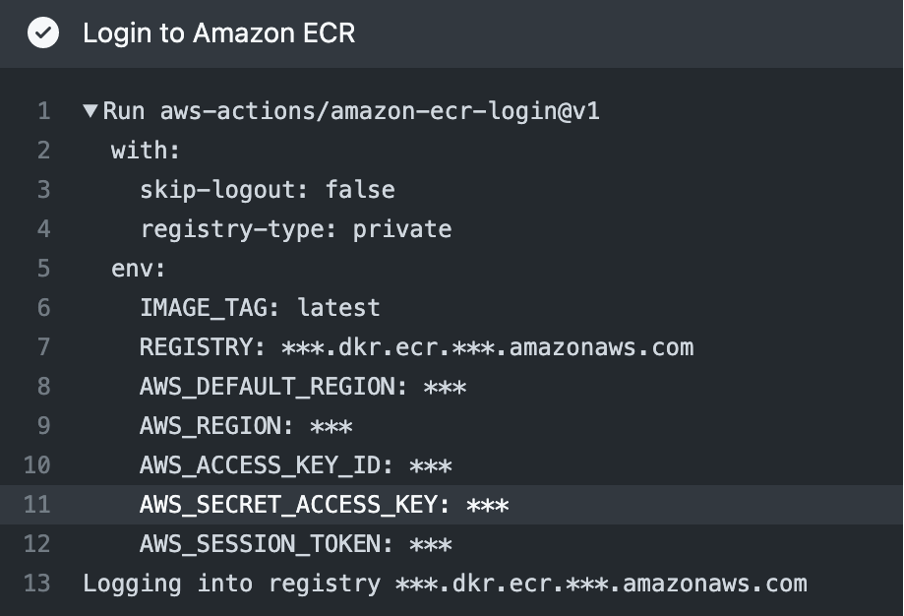

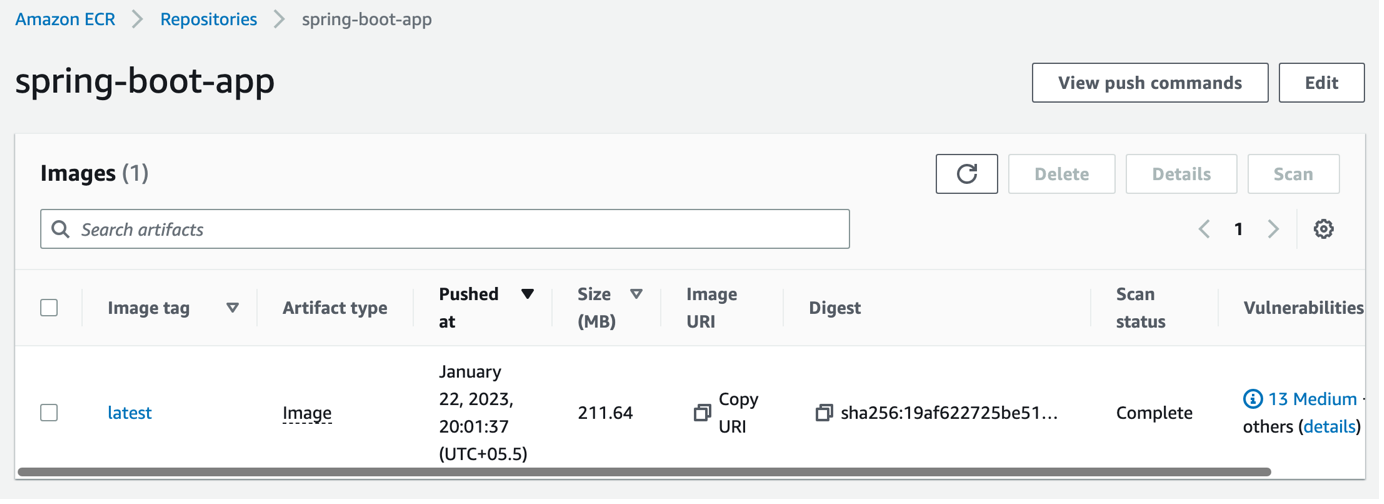

After that, the workflow logs in to Amazon ECR and uses the Docker CLI to build, tag and push the Docker image to ECR. To do this, it sets the environment variable REGISTRY to the registry output of the login-ecr step, which is the URL of the ECR registry, and uses it as the prefix of the image name.
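A sketch of the ECR login and the build/tag/push steps, assuming the aws-actions/amazon-ecr-login action (whose registry output is the registry URL). The repository name demo is hypothetical, and tagging with both the commit SHA and latest is an assumption; latest is included because the Kubernetes manifest pulls that tag.

```yaml
- name: Login to Amazon ECR
  id: login-ecr
  uses: aws-actions/amazon-ecr-login@v2

- name: Build, tag, and push image to Amazon ECR
  env:
    REGISTRY: ${{ steps.login-ecr.outputs.registry }}   # URL of the ECR registry
    REPOSITORY: demo                                     # hypothetical repository name
    IMAGE_TAG: ${{ github.sha }}
  run: |
    docker build -t "$REGISTRY/$REPOSITORY:$IMAGE_TAG" .
    # Also tag as latest, the tag the K8s manifest pulls
    docker tag "$REGISTRY/$REPOSITORY:$IMAGE_TAG" "$REGISTRY/$REPOSITORY:latest"
    docker push "$REGISTRY/$REPOSITORY:$IMAGE_TAG"
    docker push "$REGISTRY/$REPOSITORY:latest"
```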

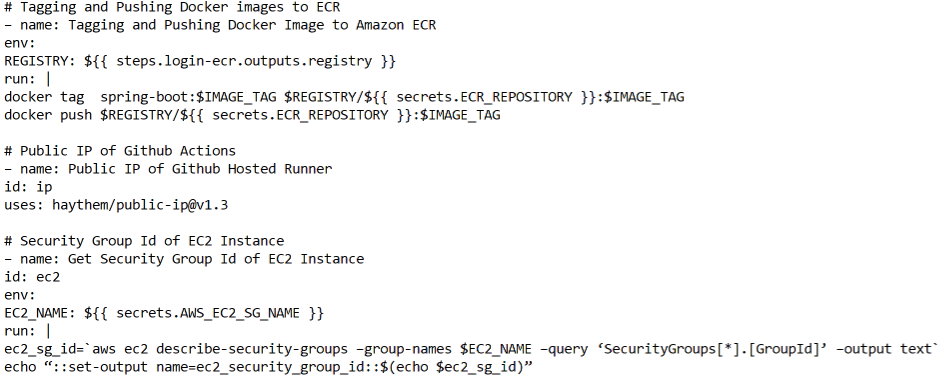

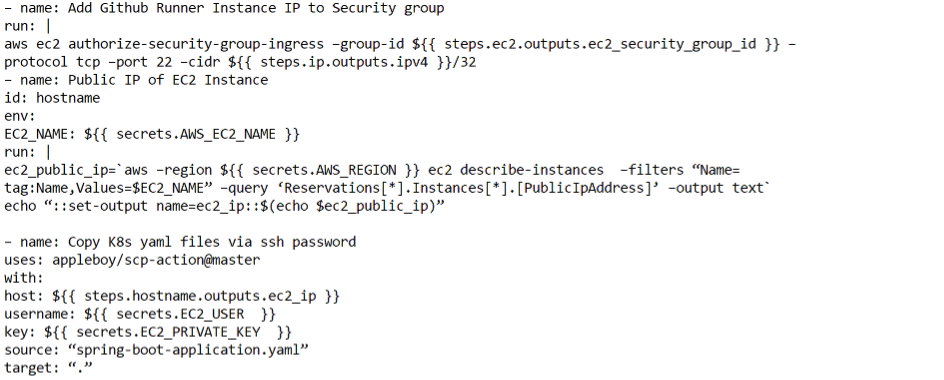

Then, the workflow gets the public IP of the GitHub Actions runner and uses the AWS CLI to look up the ID of the EC2 instance's security group, whose name is stored in the secrets.AWS_EC2_SG_NAME secret. Next, it uses the AWS CLI to add an inbound rule to that security group for the runner's IP, which allows the runner to connect to the EC2 instance.
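One way to express this step, as a sketch: it assumes the runner's IP is read from https://checkip.amazonaws.com and that the rule only needs to open SSH on port 22; the original screenshot may use a different IP lookup or port. Filtering by group-name is used instead of --group-names because the latter only works for the default VPC.

```yaml
- name: Allow the runner's IP in the EC2 security group
  run: |
    # Public IP of the runner (returned as plain text)
    RUNNER_IP=$(curl -s https://checkip.amazonaws.com)
    # Look up the security group ID by its name
    SG_ID=$(aws ec2 describe-security-groups \
      --filters "Name=group-name,Values=${{ secrets.AWS_EC2_SG_NAME }}" \
      --query 'SecurityGroups[0].GroupId' --output text)
    # Open SSH (port 22, assumed) to the runner's IP only
    aws ec2 authorize-security-group-ingress \
      --group-id "$SG_ID" --protocol tcp --port 22 --cidr "$RUNNER_IP/32"
    # Keep the values for later steps
    echo "RUNNER_IP=$RUNNER_IP" >> "$GITHUB_ENV"
    echo "SG_ID=$SG_ID" >> "$GITHUB_ENV"
```

Because a new rule is added on every run, a final step running aws ec2 revoke-security-group-ingress with the same arguments under if: always() would keep the group from accumulating stale rules.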

Lastly, the workflow uses the AWS CLI to get the public IP of the EC2 instance whose name is stored in the secrets.AWS_EC2_NAME secret, so that the user can connect to the application in the browser.
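A possible version of this lookup, assuming that AWS_EC2_NAME holds the value of the instance's Name tag and that exactly one running instance carries it. The environment variable name EC2_IP is hypothetical.

```yaml
- name: Get the EC2 instance's public IP
  run: |
    # Assumes AWS_EC2_NAME is the instance's Name tag
    EC2_IP=$(aws ec2 describe-instances \
      --filters "Name=tag:Name,Values=${{ secrets.AWS_EC2_NAME }}" \
                "Name=instance-state-name,Values=running" \
      --query 'Reservations[0].Instances[0].PublicIpAddress' --output text)
    # Make the IP available to later steps
    echo "EC2_IP=$EC2_IP" >> "$GITHUB_ENV"
    echo "EC2 public IP: $EC2_IP"
```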

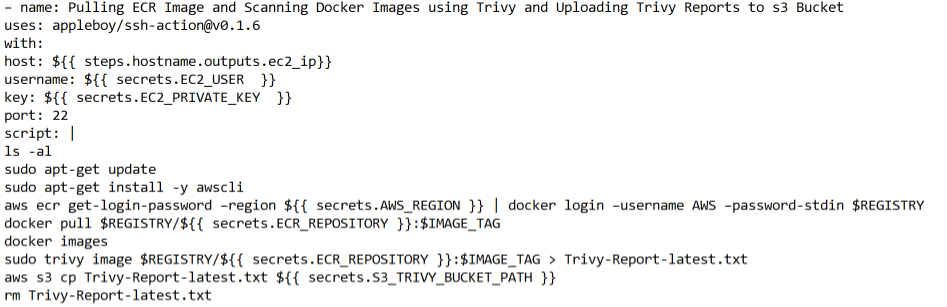

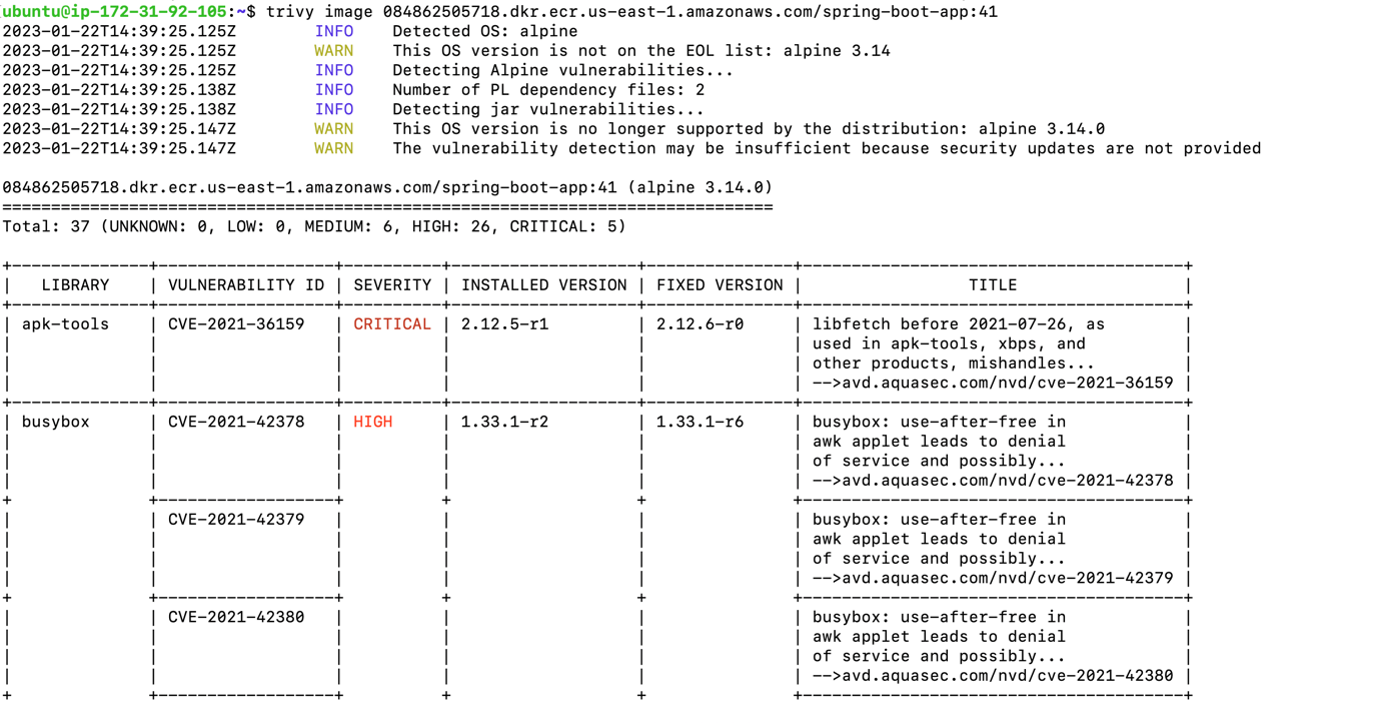

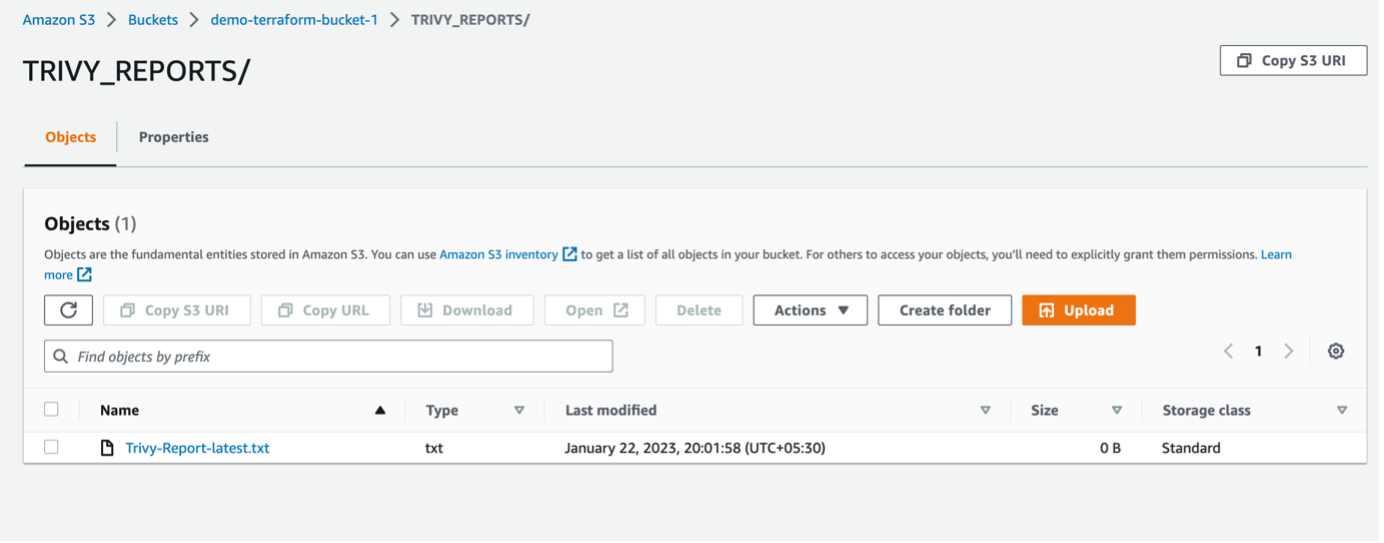

In the next step, we copy the K8s YAML file over SSH to the EC2 instance created by Terraform. We then pull the Docker image from ECR, scan it using Trivy and upload the Trivy report to an S3 bucket.
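A sketch of this step under several assumptions that are not taken from the article: the SSH key is stored in a hypothetical EC2_SSH_KEY secret, the login user is ubuntu, the manifest lives at k8s/deployment.yaml, the bucket is named my-security-reports, the instance IP is already in EC2_IP, and the instance has Docker, Trivy and the AWS CLI installed plus an IAM role that allows ECR pulls and S3 uploads.

```yaml
- name: Copy manifest, pull image and scan it with Trivy on EC2
  env:
    SSH_KEY: ${{ secrets.EC2_SSH_KEY }}                        # hypothetical secret
    IMAGE: ${{ steps.login-ecr.outputs.registry }}/demo:latest # hypothetical repository
    BUCKET: my-security-reports                                # hypothetical bucket
  run: |
    # Write the private key so scp/ssh can use it
    echo "$SSH_KEY" > key.pem && chmod 600 key.pem
    SSH_OPTS="-i key.pem -o StrictHostKeyChecking=no"
    # Copy the Kubernetes manifest to the instance's home directory
    scp $SSH_OPTS k8s/deployment.yaml ubuntu@"$EC2_IP":~/deployment.yaml
    # Unquoted heredoc: $IMAGE and $BUCKET are expanded on the runner
    ssh $SSH_OPTS ubuntu@"$EC2_IP" bash -s <<EOF
      set -e
      # Log in to ECR using the instance's IAM role (registry = part before first /)
      aws ecr get-login-password --region us-east-1 \
        | docker login --username AWS --password-stdin "${IMAGE%%/*}"
      docker pull "$IMAGE"
      # Scan the image and write a JSON report
      trivy image --format json --output trivy-report.json "$IMAGE"
      aws s3 cp trivy-report.json "s3://$BUCKET/trivy/trivy-report.json"
    EOF
```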

Trivy Results:

Uploading Trivy Reports to S3 Bucket

In the next step, we first scan the K8s YAML file using Terrascan and upload the Terrascan report to the S3 bucket, and then we deploy the Spring Boot application to the Minikube cluster.
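A sketch of the scan-and-deploy step, reusing the same hypothetical names as an assumption (EC2_SSH_KEY secret, ubuntu user, my-security-reports bucket, EC2_IP variable, manifest copied to ~/deployment.yaml). It also assumes Terrascan and kubectl are installed on the instance and that the Minikube cluster already has credentials to pull from ECR.

```yaml
- name: Scan manifest with Terrascan and deploy to Minikube
  env:
    SSH_KEY: ${{ secrets.EC2_SSH_KEY }}   # hypothetical secret
    BUCKET: my-security-reports          # hypothetical bucket
  run: |
    echo "$SSH_KEY" > key.pem && chmod 600 key.pem
    ssh -i key.pem -o StrictHostKeyChecking=no ubuntu@"$EC2_IP" bash -s <<EOF
      # Terrascan exits non-zero when it finds violations; "|| true" keeps going
      terrascan scan -i k8s -f ~/deployment.yaml -o json > terrascan-report.json || true
      aws s3 cp terrascan-report.json "s3://$BUCKET/terrascan/terrascan-report.json"
      # Deploy to Minikube and wait for the rollout to finish
      kubectl apply -f ~/deployment.yaml
      kubectl rollout status deployment/demo --timeout=120s
    EOF
```

Letting the job continue after violations mirrors the "scan, report, then deploy" order described above; a stricter pipeline could drop the || true so that any violation blocks the deployment.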

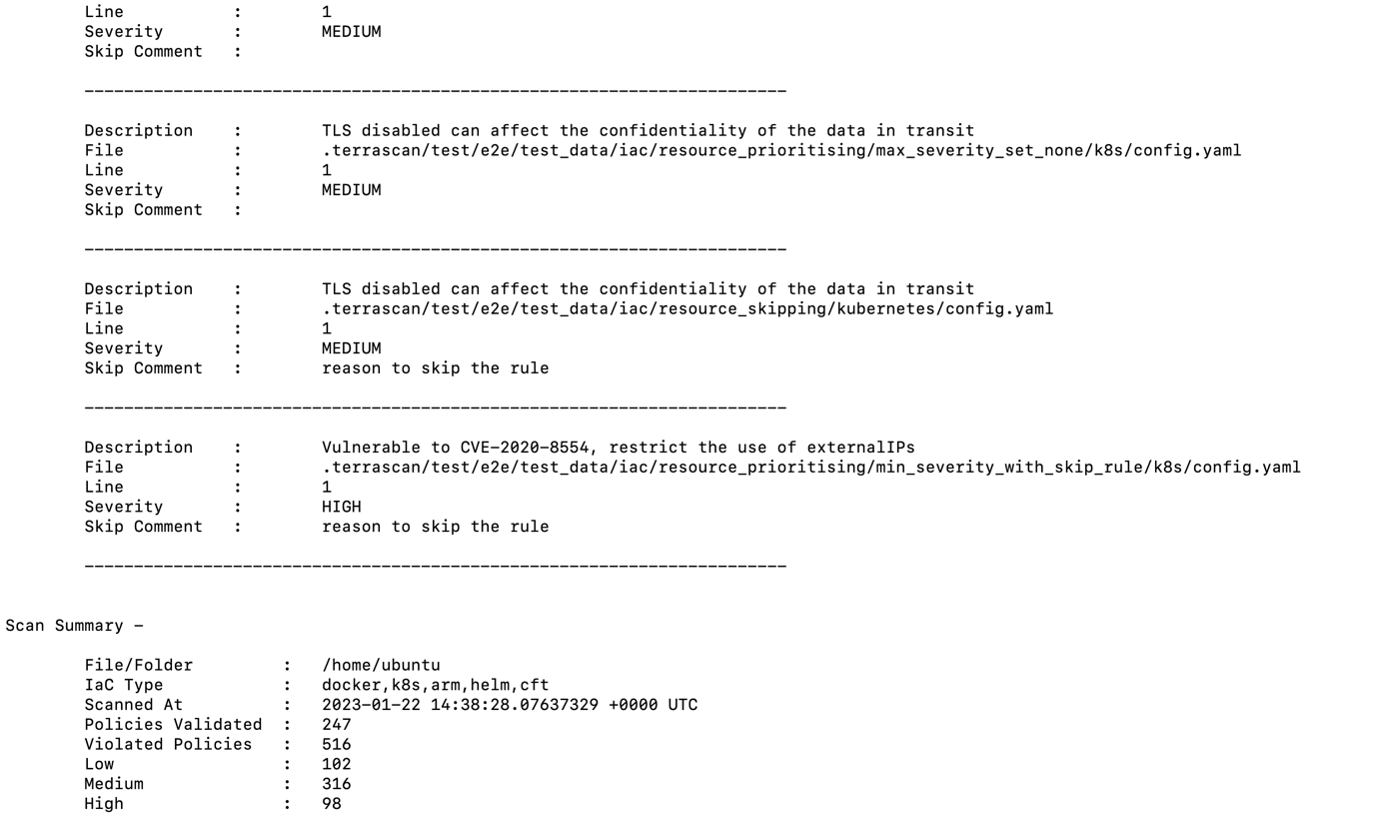

Terrascan Results

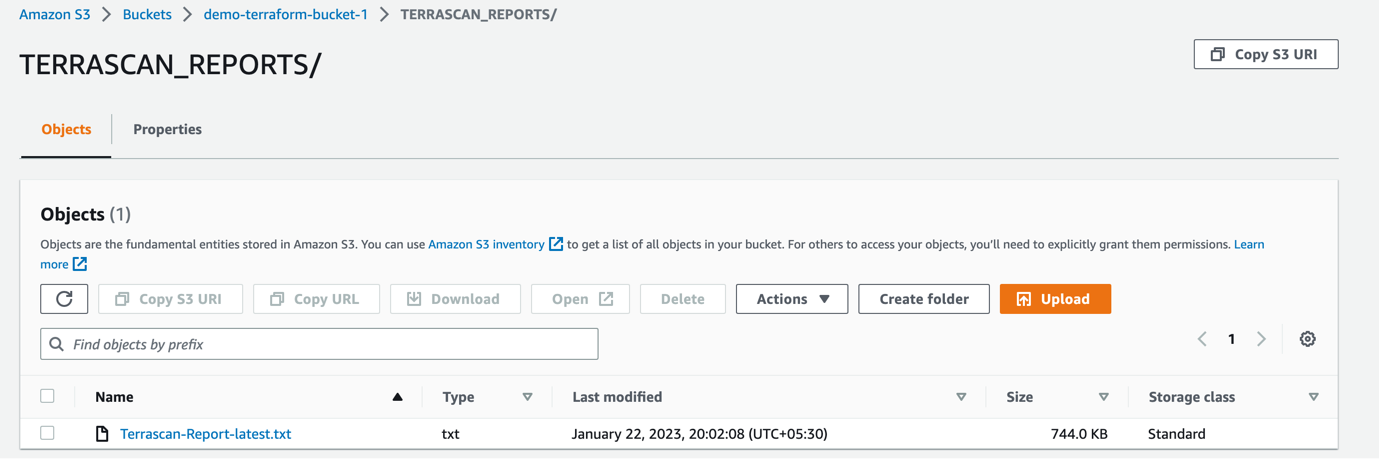

Uploading Terrascan Reports to S3 Bucket

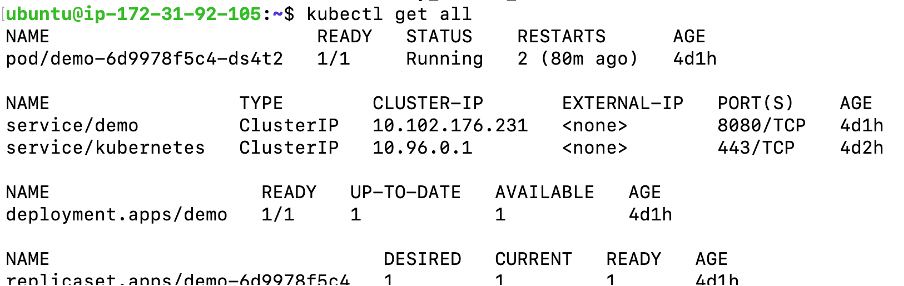

Application Deployed to the Minikube Cluster

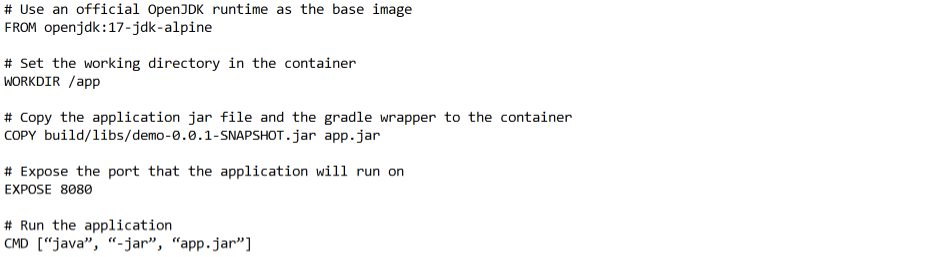

This is the Dockerfile for the Spring Boot application:

This Dockerfile creates an image for a Java application. The image is based on openjdk:17-jdk-alpine, an OpenJDK 17 JDK image built on Alpine Linux, which keeps the image small.

The file defines the following steps:

- FROM openjdk:17-jdk-alpine: This instruction sets the base image for the container to the OpenJDK 17 JDK on Alpine Linux.

- WORKDIR /app: This instruction sets the working directory for the container to be /app.

- COPY build/libs/demo-0.0.1-SNAPSHOT.jar app.jar: This instruction copies the application JAR file, demo-0.0.1-SNAPSHOT.jar, from the build/libs directory of the build context into the container's /app directory (the working directory) and renames it to app.jar.

- EXPOSE 8080: This instruction documents that the container listens on port 8080. It does not publish the port; that happens at run time, for example with docker run -p 8080:8080.

- CMD ["java", "-jar", "app.jar"]: This instruction sets the default command, java -jar app.jar, that runs when the container starts. It launches the Java application packaged in app.jar.

When you build this image and run a container from it, the application listens on port 8080. It can be reached via localhost:8080 if the port is published to the host, or via the container's IP address in other environments.

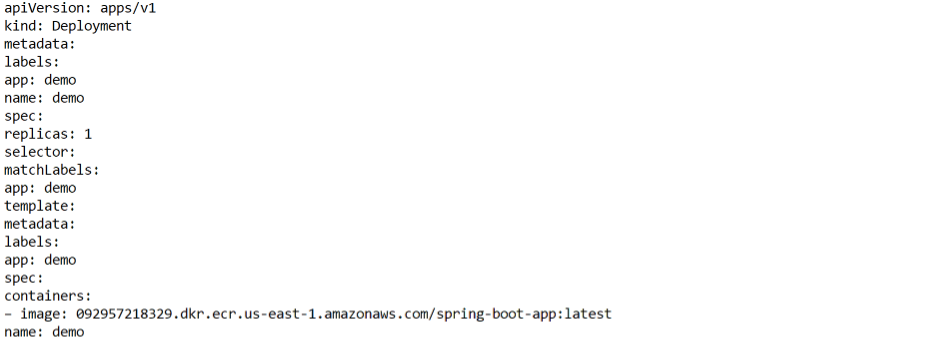

This is the K8s YAML file:

This Kubernetes YAML file defines a Deployment and a Service. The Deployment runs a single replica of a container that uses the latest tag of the Spring Boot application image, pulled from Amazon Elastic Container Registry (ECR) in the us-east-1 region. The pods are labeled “app: demo”, and both the Deployment and the Service are named “demo”.

The Service definition creates a ClusterIP Service that exposes port 8080 inside the cluster and forwards traffic to target port 8080 on the pods selected by the “app: demo” label. This allows other pods in the same cluster to reach the application through the Service's DNS name or cluster IP.

The Deployment definition consists of:

- apiVersion: apps/v1: The version of the Kubernetes API being used.

- kind: Deployment: The type of resource is Deployment.

- metadata: Information about the Deployment, such as its name and labels.

  - labels: Key-value pairs used to identify and organize resources. In this case, the Deployment is labeled “app: demo”.

  - name: demo: The name of the Deployment, which is “demo” in this case.

- spec: The specification of the Deployment.

  - replicas: 1: The number of pod replicas that should be running at any given time.

  - selector: The label selector that identifies the pods belonging to this Deployment. It must match the labels in the pod template.

  - template: The pod template used to create new pods, for example when the Deployment scales up or replaces a failed pod.

    - metadata: Information about the pod, such as its labels.

    - spec: The specification of the pod.

      - containers: The list of containers that are part of the pod.

        - image: The container image used to create the container.

        - name: The name of the container.

The Service definition consists of:

- apiVersion: v1: The version of the Kubernetes API being used.

- kind: Service: The type of resource is Service.

- metadata: Information about the Service, such as its name and labels.

  - labels: Key-value pairs used to identify and organize resources. In this case, the Service is labeled “app: demo”.

  - name: demo: The name of the Service, which is “demo” in this case.

- spec: The specification of the Service.

  - ports: The ports that the Service exposes.

    - port: 8080: The port the Service listens on at its cluster IP (not a port on the host).

    - protocol: TCP: The protocol the Service uses.

    - targetPort: 8080: The port on the pods that the Service forwards traffic to.

  - selector: The labels that identify the pods this Service routes traffic to.

  - type: ClusterIP: The type of Service being created. A ClusterIP Service gets a virtual IP that only routes traffic within the cluster and is not exposed to external traffic.
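Putting the field descriptions above together, the manifest could look like the following reconstruction. It is a sketch, not the author's exact file: the AWS account ID and repository name in the image URI are placeholders, and the container name demo is an assumption.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: demo
  name: demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: demo              # must match the pod template labels
  template:
    metadata:
      labels:
        app: demo
    spec:
      containers:
        # Placeholder account ID and repository name
        - image: <ACCOUNT_ID>.dkr.ecr.us-east-1.amazonaws.com/<REPOSITORY>:latest
          name: demo         # assumed container name
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: demo
  name: demo
spec:
  ports:
    - port: 8080             # port on the Service's cluster IP
      protocol: TCP
      targetPort: 8080       # port on the selected pods
  selector:
    app: demo
  type: ClusterIP
```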

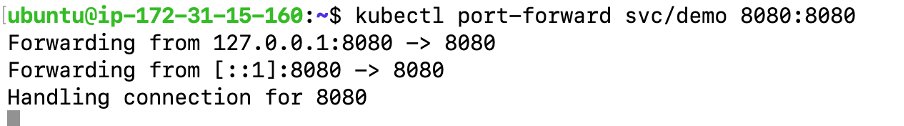

Now you need to be able to connect to the application, which you have exposed as a ClusterIP Service in Kubernetes. One way to do that, which works well at development time, is kubectl port-forward, which tunnels a local port to the Service through the Kubernetes API server:

$ kubectl port-forward svc/demo 8080:8080

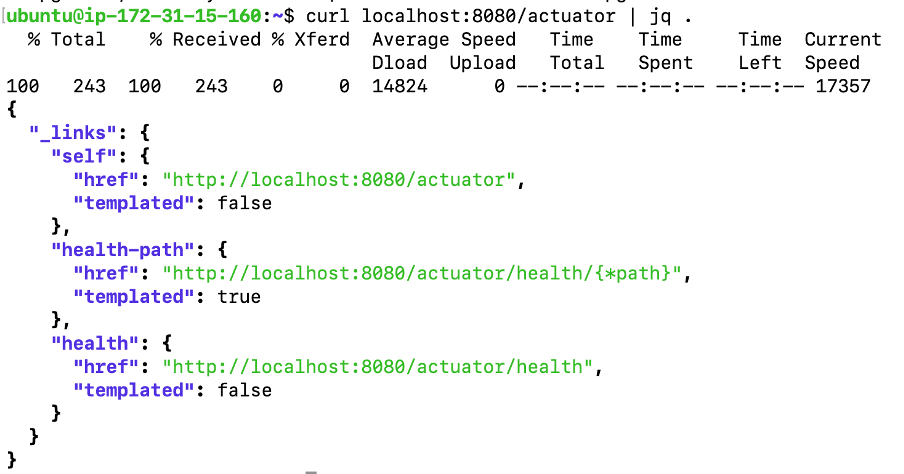

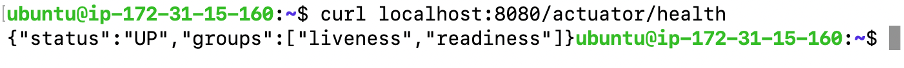

Then, in another terminal, you can verify that the app is running by curling its endpoints with this command: